As security vendors rush to get their LLM copilots (are they all agents now?) out, I haven’t seen a huge focus on designing for a character or personality aiming to delight the users. If I am a security engineer drudging through vulnerabilities or logs doing investigations all day long and I have been sold AI assistance, I better have fun using it - whether it be reviewing fewer event manually, or asking questions to AI chatbot who supposedly knows the answers to help me separate wheat from chaff.

I can hear 👂🏾 the so-called no-nonsense 🧐 types muttering under breath “security is a mission critical battle of tasks that could prevent or respond to an attack, we can’t be having fun”. Studies have shown this mindset often creates stressful environment in security teams, which actually negatively impacts the morale and burns them out rather quickly. Per a recent study, 90% of CISOs are concerned about stress, fatigue, or burnout affecting their team's well-being.

Having used Anthropic’s flagship product Claude for a long while, I can tell you that the main reason I signed up for a paid plan was simply because I like interacting with Claude. It feels personal in an endearing manner, responds to my questions calmly without making me feel like an idiot, and can be concise and verbose based on the situation. Could it be better? Ofcourse, having a humor setting won’t be the worst, and it is a choice that may be presented from time to time or at the time of onboarding. Reminds me of this scene from one of my favorite movies of all time:

Noting these traits about Claude (thanks to Mike Krieger, CPO at Anthropic), I really liked how Anthropic’s team thinks about this:

AI models are not, of course, people. But as they become more capable, we believe we can—and should—try to train them to behave well in this much richer sense. Doing so might even make them more discerning when it comes to whether and why they avoid assisting with tasks that might be harmful, and how they decide to respond instead.

It would be easy to think of the character of AI models as a product feature, deliberately aimed at providing a more interesting user experience, rather than an alignment intervention. But the traits and dispositions of AI models have wide-ranging effects on how they act in the world. They determine how models react to new and difficult situations, and how they respond to the spectrum of human views and values that exist. Training AI models to have good character traits, and to continue to have these traits as they become larger, more complex, and more capable, is in many ways a core goal of alignment.

We want people to know that they’re interacting with a language model and not a person. But we also want them to know they’re interacting with an imperfect entity with its own biases and with a disposition towards some opinions more than others. Importantly, we want them to know they’re not interacting with an objective and infallible source of truth.

Getting beyond general assistance into specialist tasks such as code generation - Augment Code has a personality geared towards thoroughness, precision, and managing complexity in large projects, making it a strong choice for professional engineers working with extensive codebases. Cursor, on the other hand, offers a more fluid and interactive experience ideal for smaller projects or developers who prioritize autocomplete and chat-based assistance but may face limitations with scale.

I am curious who in security is building their AI security agents with these features:

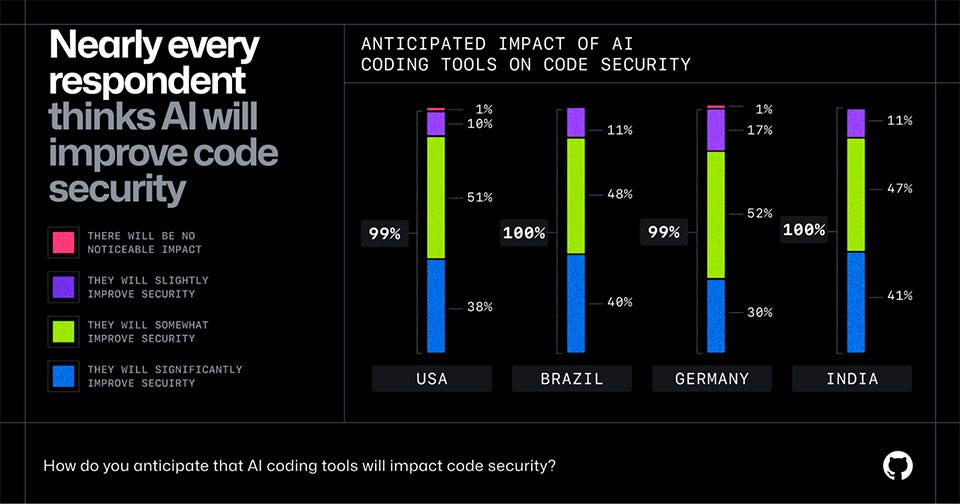

Transparent capabilities, limitations, and risks: I know security vendors are expert at avoiding liability of any kind, and this one is about keeping humans informed of the opportunity but also pitfalls of your product. Yup, no matter how much you’d want to believe otherwise, your models do lie. This is creating a false sense of trust among developers, as reported by Stanford research and a recent GitHub survey that reports the following:

Self-awareness: Current AI tools often present findings with false confidence, leading to either dangerous over-reliance or complete dismissal. Users need transparent uncertainty communication to make informed decisions about when to escalate to human experts. I have seen this sometimes with Claude and ChatGPT for legal discussions. Here are a few examples:

Vulnerability Detection

"I've identified potential SQL injection in your payment processing function. However, I can't assess your WAF rules or runtime protections - please have your AppSec team validate before patching production."Code Review

"This authentication bypass pattern looks critical, but I may be missing business context or compensating controls. Given this handles financial data, consult a certified security engineer before implementation."False Positive Awareness

"Flagging 15 XSS issues in your React app - likely false positives due to JSX syntax. Focus on the 3 server-side instances I've marked HIGH priority first."Scope Limitations

"I can analyze your application code but cannot assess your cloud config, container security, or network controls. For complete security posture, involve your DevSecOps team."Confidence Scoring

"RCE vulnerability detected (confidence: 8/10). High confidence on the deserialization flaw, but I cannot trace if user input actually reaches this code path through your specific API routes."

Chime-in when necessary with balanced conversational feedback: Building trust takes time, and it would be so good to get prompted back with intelligent questions where user may or may not know the answer but enables deep human thinking which results in overall better understanding and outcome.

User: "This SQL injection looks critical"AI: "Agreed on the technical severity. What's the data sensitivity level for this service? That context would help us prioritize this against your other findings."Memory and organizational context: Airbnb’s CISO calls memory the next AI frontier to enable dynamic personalization and adaptive behavior to make model interaction stateful. While the size context window is becoming easier every day as cost of compute goes down, a user can’t possibly provide all possible context to bootstrap a smart conversation. Memory is an unsolved problem at scale today, and we are expected to compromise with the implementation of complex RAG architecture and data pipelines. Here’s an example for Product Security assistant:

Initial Context Bootstrap:

Security Event: Suspicious API activity detected on booking service Analyst: Aria Mittal (Senior AppSec Engineer, Platform Team) Previous Context: Aria has been investigating booking fraud patterns for 3 weeksMemory-Enabled Interaction Flow:

Day 1 - Initial Investigation:

User: "Analyze the anomalous API calls on booking-service-v2"

Agent: Remembers Aria’s expertise level, previous fraud investigations, and Airbnb's booking architecture

Response: "I see elevated POST requests to

/bookings/instant-bookwith unusual geographic patterns. Based on your recent work on the payment fraud ring, this shares similar IP clustering. Shall I cross-reference with the compromised account list from your March investigation?"

Day 5 - Follow-up Analysis:

User: "Any updates on that booking anomaly?"

Agent: Recalls the specific investigation, Aria’s role, team context, and interim findings

Response: "The booking service investigation has evolved. I've identified 847 accounts following the pattern you flagged. The attack vector appears to be credential stuffing through the mobile API endpoint - different from the web-based attacks you typically see. I've already notified Jake's Incident Response team per your usual workflow."

(limited) System prompt control: I have noticed that most embedded AI products within SaaS or agentic applications don’t provide a feature set for enhanced onboarding to help manage experiences better. Users might customize things like "prioritize PCI compliance" or "focus on cloud security posture" without being able to fundamentally alter the assistant's security judgment or bypass safety mechanisms.

Role based interaction: While Agentic Identity is a hot topic, I desire an AI security assistant dynamically adapts its communication style, detail level, and recommendations based on the user's role and security needs. A CISO receives executive summaries with business impact and budget implications, while a Security Engineer gets technical details, code snippets, and remediation steps - all from the same underlying security findings.

I believe this is where the usability of many security products needs to go, and a lot of AI security copilots are no different from poorly designed UIs that we have gotten to expect.

So, while we appreciate a smart and knowledgeable intern, they only get hired in a full-time capacity beyond their hard skill because of their empathy, attitude and personality because they can make challenging situations a lot more easier to manage. Otherwise, they are commodities providing limited utilitarian value not worthy of becoming an agent.

Not quite yet.

What has your experience been with security copilots that have widespread user base and how their character “feels”? What do you desire from your AI security agent beyond problem solving skills? Looking forward to your DM or your comment below on this topic.